Journal of Medical Research and Surgery

PROVIDES A UNIQUE PLATFORM TO PUBLISH ORIGINAL RESEARCH AND REMODEL THE KNOWLEDGE IN THE AREA OF MEDICAL AND SURGERY



Yasrab Ismail1,2*, Sanobar Bughio1, Najeeb Ahmed3, Aqsa Munir3, Fatma Qaiser4, Afshan Sheikh1

1Consultant Radiologist, Dr Ziauddin Hospital, Karachi, Pakistan.

2Consultant Radiologist, Craigavon Area Hospital, Northern Ireland, United Kingdom.

3Liaquat University of Medical and Health Sciences, Jamshoro, Sindh, Pakistan.

4Aga Khan University Hospital, Karachi, Pakistan.

Correspondence to: Yasrab Ismail, Consultant Radiologist, Dr Ziauddin Hospital, Karachi, Pakistan.

Received date: February 15, 2024; Accepted date: March 06, 2024; Published date: March 13, 2024

Citation: Ismail Y, Bughio S, Ahmed N, et al. The Inter-Observer Agreement Between Resident and Consultant Radiologists in Reporting Emergency Head CT Scans. J Med Res Surg. 2024;5(1):17-21. doi: 10.52916/jmrs244130

Copyright: ©2024 Ismail Y, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution and reproduction in any medium, provided the original author and source are credited.

Objectives: To determine the inter-observer agreement between resident and consultant radiologists in reporting emergency head CT scans.

Materials and Methods: This descriptive, cross-sectional, retrospective study was performed in a tertiary care hospital in Karachi from 1st October 2021 to 31st March 2022. A total of 111 patients of either gender, aged 18 to 70 years, who underwent emergency head CT scans were included. Patients who came for follow-up CT scans were excluded. CT findings were first interpreted by the radiology resident on duty. The same images were then interpreted by a consultant radiologist, and the resident's and consultant's reports were compared to assess inter-observer agreement.

Results: The mean age was 47.47 ± 14.20 years. The largest group of patients, 46 (41.44%), was between 56 and 70 years of age. Of these 111 patients, 72 (64.86%) were male and 39 (35.14%) were female, with a male-to-female ratio of 1.9:1. A discrepancy between resident and consultant radiologists in reporting emergency head CT scans was seen in 6 (5.41%) patients. Inter-observer agreement between resident and consultant radiologists was 94.59%, with a kappa (κ) value of 0.885, indicating very strong agreement.

Conclusion: This study found little discrepancy between radiology residents and faculty members (consultant radiologists) in the interpretation of head CT images.

Keywords: Head CT, Resident, Discrepancy, X-rays, Emergency.

Physician competency is a critical factor in the overall quality of medical diagnostics, and accuracy of interpretation is a key determinant of that competency [1]. Quality assurance in radiology practice is important to ensure a high level of patient care and is increasingly recognized at the institutional level. From a quality assurance point of view, reports should be accurate, and discrepancies and errors must be minimized [2]. To monitor radiologists' skills effectively, it is essential to know the baseline rate of discrepancies in interpreting imaging examinations [3]. Discrepancies between initial and subsequent interpretations may arise from several factors, such as insufficient clinical information, suboptimal imaging technique, perceptual errors, and communication breakdowns [4]. There is still no consensus on a standard method or protocol for assessing errors and inconsistencies in imaging reports [5]. The wide spread of results across studies can often be attributed to variations in study parameters, including sample sources, techniques, imaging modalities, areas of expertise, classification systems, interpreter training levels, and degree of blinding [2].

Computed tomography, also known as CT scanning, is a medical imaging technique that uses X-rays to create multiple images of the internal organs and structures of the body. These images are then combined by a computer to produce cross-sectional views and, if required, three-dimensional images [3].

Over the past few years, CT has become the preferred diagnostic method for numerous clinical scenarios and is easily accessible, even in smaller medical facilities without on-site radiologists.

Head CT is a common diagnostic tool in emergency departments for evaluating patients with head injuries. In many cases, the head CT provides important information, and emergency physicians often need to interpret the images quickly and initiate appropriate treatment before the formal radiology report is available. Radiological interpretation is then needed to confirm the initial diagnosis and guide further management [6].

Although accurate interpretation of brain CT scans by emergency physicians is extremely important, many emergency medicine (EM) residency programs do not devote enough time to training in this area [7].

The objective of our study was to determine the discrepancy rate and inter-observer agreement in reporting head CT scans in our setting. This will help identify the areas of radiology training that need improvement to minimize reporting errors, which should ultimately support better management of these patients.

This cross-sectional study was performed in a tertiary care hospital in Karachi from 1st October 2021 to 31st March 2022. Adult male and female patients aged 18 to 70 years who underwent head CT scans were included. Patients who came for follow-up CT scans, or for whom both reports (resident and consultant) were not available, were excluded. Sample size was estimated using an online calculator (REFa). The minimum acceptable kappa and expected kappa were 0.9 and 0.69 (REFB), respectively. The proportion of patients with no abnormal findings on emergency head CT was taken as 64% from a previous study (REFc). At a power of 80% and a 95% confidence level, allowing for a 10% dropout rate, the calculated sample size was 42. However, to obtain more precise estimates, a total of 111 patients were enrolled.

All examinations were performed on a multidetector Asteion™ 16 scanner (Toshiba, Japan). The CT protocol included scanning from the base of the skull. Axial slices were obtained with a slice thickness of 2 mm, a pitch of 2, 120 kVp, 250 mAs, and a medium field of view. Sagittal and coronal images were reconstructed. The selected head CT scans were interpreted within two consecutive days: first by the resident radiologist, and then, on the same or the next day, by a consultant radiologist who was blinded to the resident's report. Both reports were analyzed by the trainee researcher.

Discrepancies in reporting were defined as:

Data were entered into SPSS version 20. Quantitative variables, such as patient age, were expressed as mean ± SD. Qualitative variables, such as indication for head CT, patient gender, year of the resident radiologist, and discrepancies, were expressed as frequencies and percentages.
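As a minimal sketch, assuming Python 3.9+ in place of the SPSS workflow the authors used, the frequency-and-percentage summaries can be reproduced from the counts reported in Table 1. The helper names `frequency_table` and `mean_sd` are hypothetical, `statistics.stdev` computes the sample SD (assumed here to be what "SD" refers to), and the ages passed to `mean_sd` are invented for illustration because patient-level data are not published.

```python
from statistics import mean, stdev


def frequency_table(counts: dict[str, int]) -> dict[str, tuple[int, float]]:
    """Return {category: (n, percentage of the total rounded to 2 decimals)}."""
    total = sum(counts.values())
    return {name: (n, round(100 * n / total, 2)) for name, n in counts.items()}


def mean_sd(values: list[float]) -> str:
    """Format a quantitative variable as 'mean ± SD' using the sample SD."""
    return f"{mean(values):.2f} ± {stdev(values):.2f}"


# Counts of CT indications as reported in Table 1 (n=111).
indications = {"Trauma": 46, "Stroke": 37, "Fits": 15, "Others": 13}
for name, (n, pct) in frequency_table(indications).items():
    print(f"{name}: {n} ({pct}%)")

# Hypothetical ages, for illustration only (not study data).
print(mean_sd([25, 40, 58, 63, 70]))
```

The same helper applied to the year-of-residency counts (22, 40, 49) or to gender (72 male, 39 female) yields the percentages given in Table 2 and in the Results.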

Data were stratified by age, indication for head CT, patient gender, and year of the trainee radiologist, and agreement between the trainee and consultant reports was calculated for each discrepancy category. A post-stratification chi-square test was applied to assess significance. Agreement between trainee and consultant radiologists was measured using the kappa (κ) statistic. Results were interpreted for each discrepancy as:
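To illustrate how the kappa statistic relates to raw agreement, the following is a minimal sketch of Cohen's kappa for a square agreement table, assuming each scan is classified by the resident (rows) and the consultant (columns). The function name and the 2×2 example counts are hypothetical; the study's own agreement table is not published, so this does not reproduce the reported κ, and the interpretation scale referred to above is not reproduced here.

```python
def cohen_kappa(table: list[list[int]]) -> float:
    """Cohen's kappa for a square agreement table.

    table[i][j] = number of scans that rater A (e.g. the resident) placed in
    category i and rater B (e.g. the consultant) placed in category j.
    """
    k = len(table)
    if any(len(row) != k for row in table):
        raise ValueError("agreement table must be square")
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of scans on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: sum over categories of the product of both raters'
    # marginal proportions.
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 2x2 table (normal / abnormal), not the study's data.
example = [[20, 5],
           [10, 15]]
print(round(cohen_kappa(example), 3))  # prints 0.4
```

Kappa is preferred over raw percent agreement because it subtracts the agreement expected by chance alone, given how often each rater uses each category.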

A total of 111 patients were included in the study, of whom 72 (64.86%) were male and 39 (35.14%) were female, a male-to-female ratio of 1.9:1. Participants were aged 18 to 70 years, with a mean age of 47.47 ± 14.20 years. The largest age group was 56 to 70 years (46 patients, 41.44%). The distribution of patients by indication for CT and by year of resident is shown in Tables 1 and 2, respectively.

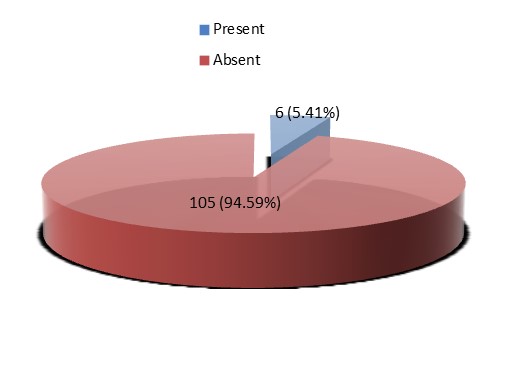

A discrepancy between resident and consultant radiologists in reporting emergency head CT scans was seen in 6 (5.41%) patients, as shown in Figure 1. Discrepancies in categories 1 and 2 are shown in Figure 2. Inter-observer agreement between resident and consultant radiologists in reporting emergency head CT scans was 94.59%, with a kappa (κ) value of 0.885, indicating very strong agreement.
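As a worked check using only the figures reported here (n = 111 scans, 6 discrepant reports), the observed agreement p_o and the discrepancy rate follow directly; κ additionally corrects p_o for the chance agreement p_e, which depends on marginal totals not reported in this section:

```latex
p_o = \frac{111 - 6}{111} = \frac{105}{111} \approx 0.9459 \;(94.59\%),
\qquad
\frac{6}{111} \approx 0.0541 \;(5.41\%),
\qquad
\kappa = \frac{p_o - p_e}{1 - p_e}
```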

Table 3 shows the stratification of discrepancies in categories 1 and 2 by year of resident, and Table 4 shows the stratification by age group.

Table 1: Distribution of patients according to indications for CT (n=111).

| Indication | No. of patients | Percentage |
|---|---|---|
| Trauma | 46 | 41.44 |
| Stroke | 37 | 33.33 |
| Fits | 15 | 13.51 |
| Others | 13 | 11.71 |

Table 2: Distribution of patients according to year of resident radiologist (n=111).

| Year of resident radiologist | Total patients | Percentage |
|---|---|---|
| 2 | 22 | 19.82 |
| 3 | 40 | 36.04 |
| 4 | 49 | 44.14 |

Figure 1: Discrepancy between resident and consultant radiologists in reporting emergency head CT scans (n=111).


Table 3: Stratification of discrepancy in categories 1 and 2 with respect to year of resident.

| Category | Resident | Discrepancy present n (%) | Discrepancy absent n (%) | P-value | Kappa value |
|---|---|---|---|---|---|
| Category 1 | 2nd year | 2 (14.3) | 12 (85.7) | 0.001 | 0.92 |
| | 3rd year | 0 (0) | 11 (100) | | |
| | 4th year | 0 (0) | 16 (100) | | |
| Category 2 | 2nd year | 2 (25) | 6 (75) | 0.461 | 0.86 |
| | 3rd year | 1 (3.4) | 28 (96.6) | | |
| | 4th year | 1 (3) | 32 (97) | | |

Table 4: Stratification of discrepancy in categories 1 and 2 with respect to age groups.

| Patient age (years) | Resident | Category 1 present n (%) | Category 1 absent n (%) | Category 2 present n (%) | Category 2 absent n (%) | Category 1 P-value | Category 1 kappa | Category 2 P-value | Category 2 kappa |
|---|---|---|---|---|---|---|---|---|---|
| 18-35 | 2nd year | 0 (0) | 1 (100) | 0 (0) | 8 (100) | 1 | 1 | 0.178 | 0.96 |
| | 3rd year | 0 (0) | 4 (100) | 0 (0) | 5 (100) | | | | |
| | 4th year | 0 (0) | 4 (100) | 1 (25) | 3 (75) | | | | |
| 36-55 | 2nd year | 1 (50) | 1 (50) | 2 (33) | 4 (67) | 0.019 | 0.96 | 0.053 | 0.86 |
| | 3rd year | 0 (0) | 8 (100) | 0 (0) | 7 (100) | | | | |
| | 4th year | 0 (0) | 7 (100) | 0 (0) | 9 (100) | | | | |
| 56-70 | 2nd year | 0 (0) | 1 (100) | 0 (0) | 3 (100) | 0.031 | 0.96 | 0.391 | 0.96 |
| | 3rd year | 0 (0) | 5 (100) | 1 (2) | 10 (9) | | | | |
| | 4th year | 0 (0) | 8 (100) | 0 (0) | 17 (100) | | | | |

Non-contrast CT (NCCT) of the brain is a radiological test frequently used in emergency departments (EDs) to evaluate neurological and traumatic symptoms, in both critical and non-critical cases. Where time is critical, emergency physicians (EPs) must respond quickly based on the findings of relevant investigations [8].

Several studies have investigated the interpretation of radiological scans in emergency departments across different imaging techniques, highlighting differences between the evaluations of resident and consultant radiologists, and we compared our findings with them. In our study, the objective was to evaluate the level of agreement between resident and consultant radiologists when reporting emergency head CT scans.

In our study, inter-observer agreement between resident and consultant radiologists in reporting emergency head CT scans was 94.59%, with a kappa (κ) value of 0.885. This is comparable to the findings of Zan, et al. [9], who examined 4534 neuroradiology cases that had been evaluated at an outside institution; a total of 347 cases (7.7%) showed substantial differences between non-specialist radiologists and neuroradiologists with specialized training.

Arendts G, et al. [10] found that of 1282 scans, 190 were misinterpreted, 78 of which had the potential for acute consequences. In a study by Mucci B, et al. [11] of 100 consecutive scans, agreement between residents and consultant radiologists was 86.6%. In the study by Khoo NC, et al. [12] of 287 scans interpreted by residents and registrars, 32 were false negatives. However, findings for X-rays and CT scans have differed across studies [13,14].

Viertel VG, et al. studied academic neuroradiologists and found a 1.8% rate of clinically significant interpretation discrepancies [9]. Guérin G, et al. [15] studied inter-observer agreement and concordance on head CT studies; agreement on positive and negative results for clinically pertinent findings was 0.86 (0.77–0.95), but concordance was only 75.6% (67.2%–82.5%).

Inter-observer agreement on CT pulmonary angiography (CTPA) interpretation between radiologists and residents has been reported to improve with the residents' level of training. Agreement was better between senior residents and the radiologist than between junior residents and the radiologist, which Joshi, et al. [16] attributed to the senior residents' better knowledge of anatomy and image interpretation. In addition, radiology residents require on-call work to gain experience and build confidence in interpreting medical images [17,18].

In a prospective evaluation by Wysoki G, et al. [19] of 419 consecutive emergency post-traumatic cranial CT examinations, there was very little disagreement between the readings of staff neuroradiologists and radiology residents. On-call radiology residents assessed these scans, and inconsistencies between their interpretations and those of the staff radiologists were recorded. These differences were divided into two categories: failure to detect an abnormality (false-negative findings) and misinterpretation of a normal finding as abnormal (false-positive findings).

Major discrepancies were those that could affect patient treatment in an emergency situation, while minor discrepancies could not. The rates of major and minor discrepancies between resident and staff radiologists' interpretations were 1.7% and 2.6%, respectively. Statistically, the discrepancy rate was considerable, but no change in treatment was attributed to the delay in diagnosis.

Stevens, et al. [20] sought to identify inconsistencies between the initial reports from radiology residents and the final reports from attending radiologists. The authors used similar grading procedures and an ED chart review to identify differences. A total of 2.0% of the studies had discrepancies: 1.6% showed considerable discordance and 0.43% necessitated an immediate change in management. This indicates that the resident's initial report and the attending radiologist's final report sometimes differed conspicuously, and that in some cases this disparity led to a change in patient therapy.

Ruchman, et al. [21] studied neuroradiological CT scans and found that only 0.08% of cases showed a significant variation in patient outcome, which is in agreement with our study.

This study found only a small discrepancy between radiology residents and faculty members (consultant radiologists) in the interpretation of head CT images.

The authors declare no conflicts of interest or financial gain.

No.